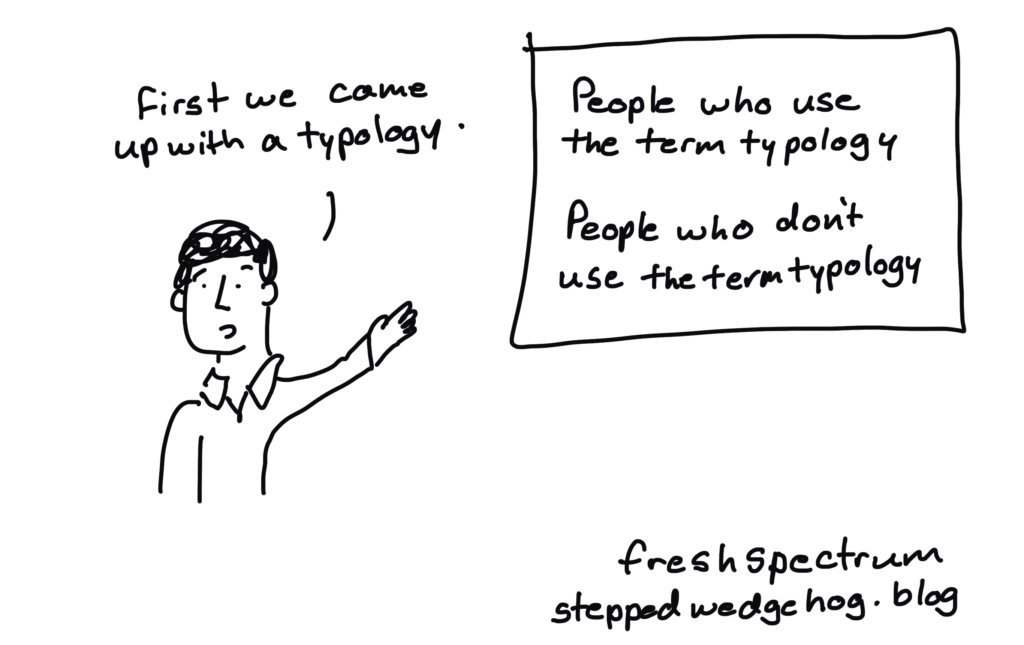

After you have read a few published examples of stepped wedge trials you begin to realise that the term “stepped wedge” covers a surprisingly rich variety of research designs. Trying to divide anything into categories is often a fool’s errand or wild-goose chase, but since I can’t resist a typology this post attempts to distinguish three common types of stepped wedge trial: the cohort, the repeated cross-section, and the continuous recruitment design. (For a more basic introduction to stepped wedge trials you may prefer to try this post first, and for an introduction to randomised controlled trials see this post.)

Setting the scene

In the post What is a stepped wedge trial? I imagined a cluster randomised trial where all the clusters (the hospitals, general practices, local communities, or whatever those “clusters” are) are ready to be randomised at the same time, and I pointed out that if you just took longer over the trial you might be able to collect more data. This might be because eligible participants for your study are passing by in a constant stream, and the longer you spend recruiting the more people you can include from this stream of eligible participants. Or it might be that by giving yourself a more relaxed timetable you have the chance to go back and revisit the same clusters on a number of separate occasions. Let’s think about the latter situation first.

Revisiting clusters

Revisiting the same clusters on a number of separate occasions can be hugely advantageous. It’s not just that you can collect more data. In a stepped wedge design you can also use a cluster as its own control – that is, insofar as you believe that people in some clusters generally have better health outcomes than people in other clusters, you can allow for this by comparing a cluster’s outcomes after the intervention has been introduced with the same cluster’s outcomes assessed on some occasion before the intervention was introduced (see also Is a stepped wedge trial really a randomised controlled trial?).

Now, if you return to a cluster you visited on a previous occasion and find the same people there (and if you can assess those same people’s outcomes again) then this is even better: each person can now act as their own control, and you’re no longer just assuming that the cluster’s characteristic performance will be reflected in a different group of people from that cluster.

Cohort stepped wedge trials

When you follow a group of people over time, measuring their health outcomes at specific intervals, the group is known in research design as a “cohort” (which always makes me think of the ancient Roman cohorts of soldiers that made up a Legion – as in “The Assyrian came down like a wolf on the fold, And his cohorts were gleaming in purple and gold”). So, a stepped wedge trial in which you revisit the same clusters in different “periods” of the trial, and consequently end up measuring some or all of the same people’s outcomes on more than one occasion (some occasions before the intervention has been introduced, and others after), is called a cohort stepped wedge trial. This is the first of my three kinds of stepped wedge trial.

Open and closed cohorts

If you can measure the outcomes of exactly the same people each time you visit a cluster, then this is called a closed cohort stepped wedge trial. This may be a slightly idealised or unrealistic scenario. It might seem more reasonable in general to imagine that people will come and go from the cohort over time. That is, on two separate occasions when you visit the same cluster, it seems more realistic to expect that some of the people whose outcomes you measure will be the same people on both occasions, while others will be different. This is called an open cohort stepped wedge trial – the cohort is “open” in the sense that people can come and go from it.

Because closed cohorts are in some ways neater and easier to conceptualise (and perhaps easier to analyse) than open cohorts, researchers may deliberately design or engineer their stepped wedge cohorts to be closed rather than open. In one stepped wedge trial of a tool for allowing general practices to create registers of patients at high risk of emergency hospital admission, for example, the researchers only followed patients who were registered with practices at the start of the trial and whom they could track during the course of the trial. Some of these patients did leave their practice before the trial was complete, and therefore could not be followed any longer, but they were not replaced in the trial with new patients from the same practice.

Repeated cross-section stepped wedge trials

Now, imagine an open cohort where there is such a high degree of “churning” (a lovely word) of the members of the cohort between visits that it is unlikely you will ever see the same person twice. Or imagine clusters that are so large or populous that researchers don’t attempt to measure everyone’s outcomes in a cluster, but instead survey a small, random sample of them at each visit – so that once again there is a negligible chance of seeing the same person twice.

This is no longer a cohort at all, and needs a different name. The sample of individuals from a cluster who are assessed at each visit is a snap-shot or “cross-section” of the individuals characteristic of that cluster. Hence this sort of design is called a repeated cross-section stepped wedge trial. This is the second of my three kinds of stepped wedge trial.

A great example of a repeated cross-section design is the Devon Active Villages Evaluation, or DAVE trial (also a great acronym). The intervention evaluated in this trial was aimed at increasing physical activity, and was delivered at community level and tailored to the local community. 128 rural villages in the UK county of Devon were randomised to four different sequences, or schedules for implementing the intervention, in a stepped wedge pattern. The villagers’ physical activity levels were assessed by postal survey at five fixed time-points throughout the trial. On each occasion a random sample of people from each village was selected to receive the postal survey.
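To make the "stepped wedge pattern" concrete, here is a minimal Python sketch of the kind of schedule that four sequences and five time-points could produce. It assumes the textbook layout, in which every sequence is unexposed at the first time-point and one further sequence switches to the intervention at each step. That layout, and the function name `stepped_wedge_matrix`, are my assumptions for illustration, not details taken from the DAVE trial itself.

```python
def stepped_wedge_matrix(n_sequences, n_timepoints):
    """Return a schedule as a list of rows, one row per sequence.

    Each row has one entry per time-point: 0 means the intervention has
    not yet been introduced, 1 means it is in place.
    Assumption (not from the trial): sequence k switches over between
    time-point k and time-point k + 1, counting from zero.
    """
    if n_timepoints < n_sequences + 1:
        raise ValueError("need at least one more time-point than sequences")
    return [
        [1 if timepoint > sequence else 0 for timepoint in range(n_timepoints)]
        for sequence in range(n_sequences)
    ]


if __name__ == "__main__":
    # Four sequences and five assessment time-points, as in the text.
    for row in stepped_wedge_matrix(4, 5):
        print(" ".join(str(cell) for cell in row))
```

Read across a row to follow one sequence of villages through time; read down a column to see which sequences have the intervention at a given survey. The staircase of ones is the "wedge".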

Continuous recruitment stepped wedge trials

So far I’ve talked about what happens in the case where extending the duration of your cluster randomised trial means going back and revisiting your clusters on a number of separate occasions. What if the people who take part in your trial arrive (and then leave again) in a continuous stream, and extending the duration of your trial just means spending longer over recruitment so that you include more people from this continuous stream of eligible participants? This is called a continuous recruitment stepped wedge trial, and is the third of my three kinds of stepped wedge trial.

An example of a continuous recruitment stepped wedge trial is the Gambia Hepatitis Intervention Study (the first stepped wedge trial). Here the individual participants were newborn infants in the Gambia, and clusters were the regional immunisation teams. New, eligible participants (newborn infants) arrived at a fairly steady rate, as is natural. In any continuous one-year period of recruitment the researchers expected to recruit around 30,000 children into the study, and by scheduling the trial over a total of four years they hoped to see 120,000.

As in a cohort or repeated cross-section design, the extended timescale of a continuous recruitment design gives you the opportunity to introduce the intervention to a cluster while the trial is running. Thus there may be periods where you are recruiting people before the intervention has been introduced at a cluster, and periods where you are recruiting people after the intervention has been introduced.
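The arithmetic behind this can be sketched in a few lines of Python. The study-wide figures (around 30,000 children a year over four years) come from the description above. The single-cluster numbers, and the function name `expected_recruits`, are hypothetical and only there to show how a steady stream of participants splits into "before" and "after" groups once you fix the moment at which a cluster switches to the intervention.

```python
def expected_recruits(rate_per_year, trial_years, crossover_year):
    """Split expected recruitment into (before, after) the intervention starts.

    Assumes a constant recruitment rate, which is a simplification.
    crossover_year is the time, measured from the start of the trial,
    at which the intervention is introduced.
    """
    if not 0 <= crossover_year <= trial_years:
        raise ValueError("crossover must fall within the trial")
    before = rate_per_year * crossover_year
    after = rate_per_year * (trial_years - crossover_year)
    return before, after


if __name__ == "__main__":
    # Study-wide total from the text: about 30,000 a year for four years.
    print(30_000 * 4)

    # Hypothetical cluster: 2,000 eligible infants a year, with the
    # intervention introduced 1.5 years into a four-year trial.
    print(expected_recruits(rate_per_year=2_000, trial_years=4, crossover_year=1.5))
```

A cluster that switches early contributes mostly "after" participants and one that switches late contributes mostly "before" participants, which is why the timing of each step matters.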

Here, once again, as an example, is the diagram of recruitment to the Gambia Hepatitis Intervention Study:

Continuous vs discrete

Continuous recruitment designs differ in an important way from cohort or repeated cross-section designs: in a continuous recruitment design the “periods” of time are not distinct time-points with clear water between them, but instead run one into another without a break. Time is one continuous process rather than a series of discrete occasions. This idea is explored at greater length in this paper.

Nevertheless, continuous recruitment designs and repeated cross-section designs do share one important feature: that each participant is only assessed once. Perhaps for this reason, the term “repeated cross-section” is sometimes used interchangeably for both. Personally, I much prefer to say “continuous recruitment” when this is the case, as a “cross-section” reminds me inescapably of a slice through the salami of time, with repeated cross-sections being repeated slices, whereas continuous recruitment is continuous recruitment.
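For anyone who likes their typologies executable, the distinctions drawn so far can be written as a small decision rule. This is only a sketch of my own categories: the function name `classify_design` and its two inputs are invented for illustration, and real trials may not fit any branch cleanly.

```python
def classify_design(reassessed, time_is_continuous):
    """Name the kind of stepped wedge trial described by two features.

    reassessed: "all" if exactly the same people are measured at every
        visit, "some" if people come and go between visits, "none" if
        each participant is only assessed once.
    time_is_continuous: True if recruitment runs without a break, False
        if data are collected on discrete occasions. Only consulted
        when nobody is assessed more than once.
    """
    if reassessed == "all":
        return "closed cohort"
    if reassessed == "some":
        return "open cohort"
    if reassessed == "none":
        if time_is_continuous:
            return "continuous recruitment"
        return "repeated cross-section"
    raise ValueError("reassessed must be 'all', 'some' or 'none'")


if __name__ == "__main__":
    # Fresh random sample surveyed at fixed time-points (as in DAVE).
    print(classify_design("none", time_is_continuous=False))
    # Newborns arriving in a steady stream (as in the Gambia study).
    print(classify_design("none", time_is_continuous=True))
```

Notice that the last two categories differ only in how time is treated, which is exactly why they are so easily confused.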

Which type of design to use

Note that it isn’t usually a question of deciding which of the three kinds of stepped wedge trial – cohort, repeated cross-section or continuous recruitment – is “best” for your trial. Instead, the context generally drives the approach.

Suppose you want to study the effects of an intervention in schools – this might be a healthy eating programme or exercise intervention, for example. Suppose you wanted to study one year-group of school-children in particular (10-11-year-olds, say). Over the course of a school-year, the membership of this year-group at a school will not change much – kids don’t join or leave the school part-way through a school-year, as a rule. Thus a stepped wedge trial conducted in schools over a single school year could take advantage of this captive cohort, and assess the same schoolchildren once in each term, say (see here for a real-life example). This would be a closed cohort stepped wedge trial.

But now imagine you plan to revisit your schools once every school-year over a period of more than one year (see here for an example). Now the membership of a particular year-group will have changed at each school from one year to the next. Each yearly visit offers you a different cross-section of the students who attend each school, and a stepped wedge trial following this plan would be a repeated cross-section stepped wedge trial.

Final thoughts

I began this discussion by acknowledging that categorisation is hard. Real examples of trials may not always fit perfectly into one idealised category. Still, I think that by looking for commonalities and differences in the ways that different trials recruit or identify their participants, you can reach a much deeper understanding of stepped wedge trial design in particular, and of research design in general.